Filmmaker explores dangers of advanced artificial intelligence

Posted by Elena del Valle on March 4, 2016

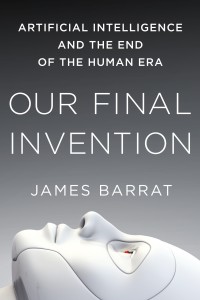

Our Final Invention

Photos: book cover courtesy of St. Martin’s Press, and author photo courtesy of Ruth Lynn Miller

Filmmaker James Barrat was stunned when he stumbled on an issue he found so complex and important that he felt it necessary to write about it rather than produce a documentary. Four years later his book, Our Final Invention: Artificial Intelligence and the End of the Human Era (St. Martin’s Press, $16.99), was published. He is now keen to spread the word about the Artificial Intelligence (AI) issue further through a film, provided he finds funding.

“The AI Risk issue struck me as hugely important and all but unknown,” the author said by email. “There were no books or films about it, and the only people discussing it were technologists and philosophers. They were working hard on solving the problem, but not on publicizing it. As a documentary filmmaker, I bring complex scientific and historical subjects to large audiences. That’s my expertise. So I felt uniquely positioned, even duty-bound, to spread the word. I wrote a book rather than make a film because even simplified AI Risk is a complex subject. An average hour-long documentary film contains just 5,000 words. I knew I could do a better job at bringing this subject to a wide audience with a book of 80,000 words.”

The softcover book, his first, is divided into an introduction and 16 chapters. He wrote it for the general public, convinced that everyone should become aware of AI issues. In the book, he says he believes AGI, an advanced machine intelligence, could arise from Wall Street. He argues that while an intelligence explosion might be missed by the average person, the secretive environment on Wall Street lends itself to the development of such technology. He also believes the developers of artificial intelligence may possess the same lack of moral fiber as the oft-maligned financial executives who have repeatedly misbehaved in the absence of strict regulation.

“Artificial Intelligence is the science and study of creating machines that perform functions normally performed by human intelligence,” he said when asked to define the concepts for nonscientists. “These include the whole range of human cognitive abilities: logical reasoning, navigation, object recognition, language processing, theorem proving, learning, and much more. AGI is Artificial General Intelligence, or machine intelligence at roughly human level, in all its domains. ASI is Artificial Super Intelligence, or machine intelligence at greater than human level. We’re probably no more than two decades away from creating AGI. Shortly after that we’ll share the planet with ASIs that are thousands or millions of times more intelligent than we are. My book asks ‘Can we survive?’”

Where is the dividing line between an operating system and artificial intelligence? He describes an operating system as “simply the interface between you, a human, and the parts of the computer that process information. For example, Apple’s OS is functional computer window dressing that allows you to perform useful jobs with the computer’s processors, memory and other hardware. The OS isn’t intelligent.” Artificial Intelligence, by contrast, is a computer program or set of linked programs that performs acts of human-like intelligence in very narrow domains, such as search and navigation.

When asked whether an entity must be self-aware to qualify as artificial intelligence, he said, “Artificial Intelligence is all around us – in our phones, our cars, our homes. It’s not self-aware in any important sense. However, self-awareness may be necessary for AGI. In our own intelligence, self-awareness plays a large part. Our awareness of our bodies and minds and our environment impacts how we perform tasks, achieve goals, and learn. It remains to be seen if computers can emulate our intelligent behavior without self-awareness. It may be possible. Probably it’ll be necessary for the computer to have some kind of self-awareness, for example, a mathematical model of itself and its environment.

“But will it have anything like real consciousness? Will it know it exists? Good questions. I believe we’ll create wildly intelligent aliens. That is, they’ll be super effective at intelligent tasks, but they’ll perform them differently than we do, and they won’t possess an inner life anything like ours. For example, an airplane doesn’t fly like a bird or have other qualities of a bird, but it accomplishes the same essential feat much faster and for much longer, under harsher conditions. In the same way, a superintelligent machine will outperform us in every cognitive dimension. But it won’t have our mammalian evolutionary inheritance of empathy, or love. It won’t have an inner life at all unless we program one in. And we don’t have any idea how to do that.”
